The plug-ins will let companies offer the AI-based natural language processor within their own products, allowing users to ask detailed questions and get human-like answers.

OpenAI, the Microsoft-backed company that developed ChatGPT, on Thursday announced support for plug-ins designed to allow companies to more easily embed chatbot functionality into their products.

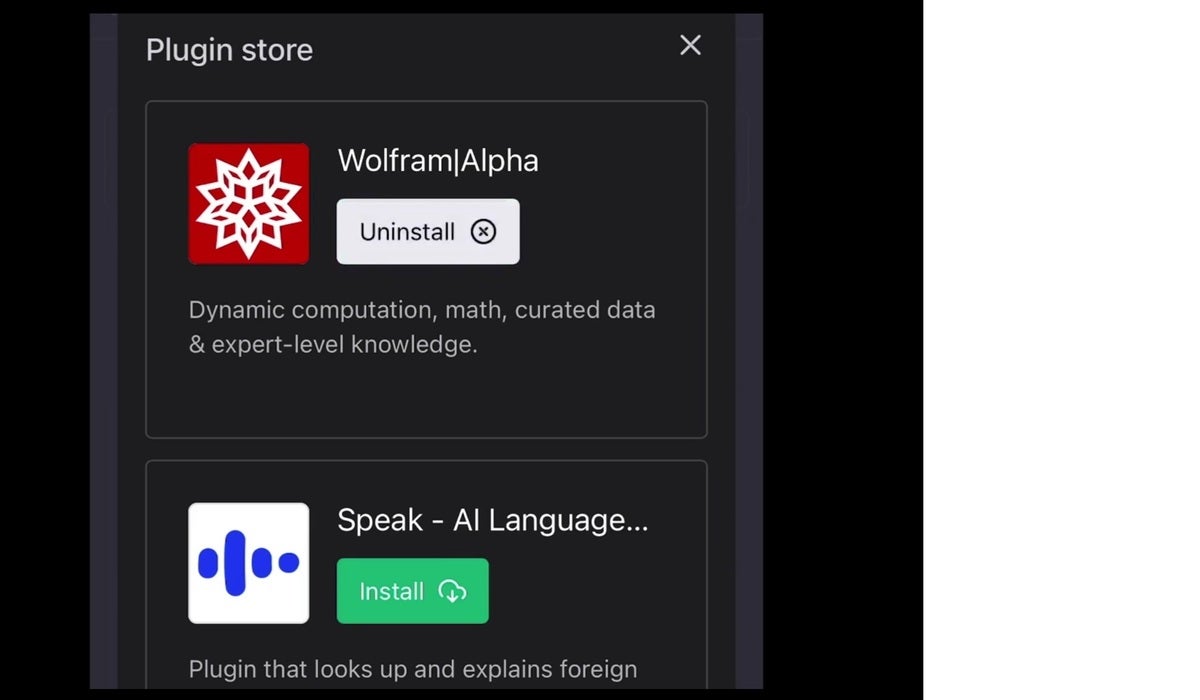

The first plug-ins have already been created by Expedia, FiscalNote, Instacart, KAYAK, Klarna, Milo, OpenTable, Shopify, Slack, Speak, Wolfram, and Zapier, OpenAI said.

“We are gradually rolling out plug-ins in ChatGPT so we can study their real-world use, impact, and safety and alignment challenges — all of which we’ll have to get right in order to achieve our mission,” San Francisco-based OpenAI said in a blog post.

OpenAI's plug-ins are tools designed specifically for language models; they help ChatGPT access up-to-date information, run computations, or use third-party services.

For the first time, the plug-ins enable ChatGPT Plus to access live web data rather than only the information the large language model was trained on.

Users can select and add any of the plug-ins currently offered. For example, a user who adds the Instacart grocery delivery plug-in, along with others, can use the natural language processor to ask for restaurant recommendations, recipes, the ingredients for a meal, and that meal's total calorie count.

An example of a question might be: “I’m looking for vegan food in San Francisco this weekend. Can you offer me a great restaurant suggestion for Saturday, and also a simple meal recipe for Sunday (just the ingredients)? Please calculate the calories for the recipe using WolframAlpha.”

“Users have been asking for plug-ins since we launched ChatGPT (and many developers are experimenting with similar ideas) because they unlock a vast range of possible use cases,” OpenAI said.

The generative AI developer said it plans to gradually roll out larger-scale access after an alpha period, as it learns more from plug-in developers and ChatGPT users.

Arun Chandrasekaran, a distinguished vice president analyst with Gartner, said one of the challenges with applications such as ChatGPT and their underlying AI models (such as GPT-4) is their static nature, which stems from the gap between a model's training cut-off date and the date the application is actually released.

“Through a plug-in ecosystem, ChatGPT could now handle more real-time information from a set of curated sources,” Chandrasekaran said. “They are announcing both first-party plug-ins, which allow ChatGPT to connect to internet sources for providing more up-to-date information, as well as third-party sources such as Expedia and OpenTable. On the flip side, this also increases the attack surface and potentially more latency domains in the architecture.”

OpenAI recognized the “significant new risks” associated with enabling external tools through ChatGPT.

“Plugins offer the potential to tackle various challenges associated with large language models, including ‘hallucinations,’ keeping up with recent events, and accessing (with permission) proprietary information sources,” OpenAI said.

The company said it has given only a limited number of plug-in developers, drawn from a waitlist, access to the documentation needed to build a plug-in for ChatGPT. That way, OpenAI can monitor any adverse effects of the plug-ins.

In a blog post Friday, cybersecurity company GreyNoise.io, for example, warned of a known vulnerability in MinIO's object storage platform that could affect plug-in developers.

The post recommended that developers who use MinIO in their plug-ins upgrade to a patched version. “Your endpoint will eventually get popped if you do not update the layer to the latest MinIO,” the company said.

While application developers have already been able to use ChatGPT’s API to tailor it for use with their products, plug-ins will make the task far simpler, said Dr. Chirag Shah, a professor at the Information School at the University of Washington.

“APIs require technical knowhow. Just as with social media, there are other ways to access services through subscriptions. Plug-ins make it easy for people to deploy ChatGPT without a lot of effort,” Shah said. “They won’t work for every company. They are targeted at a particular audience.”

OpenAI acknowledged that its GPT large language model (LLM) is limited in what it can do today because it has not been trained on the latest information for the myriad applications on the web. The LLM has billions of parameters, but they are not specific to what Expedia, for example, might want its users to access.

Currently, the only way for GPT-4 to learn an organization's specifics is from training data supplied by that organization. For example, if a bank wanted to use ChatGPT for its internal workers and external customers, the model would need to ingest information about the bank so that it could give bank-specific answers when users ask the chatbot questions.

The plug-ins make it easier for ChatGPT’s LLM to access company- and product-specific information, details that would otherwise be too recent, too personal, or too specific to be included in the training data.

“In response to a user’s explicit request, plug-ins can also enable language models to perform safe, constrained actions on their behalf, increasing the usefulness of the system overall,” OpenAI said. “We expect that open standards will emerge to unify the ways in which applications expose an AI-facing interface. We are working on an early attempt at what such a standard might look like, and we’re looking for feedback from developers interested in building with us.”