In a recent study published in npj Digital Medicine, researchers evaluated ChatGPT's ability to extract structured data from unstructured clinical notes.

Study: A critical assessment of using ChatGPT for extracting structured data from clinical notes. Image Credit: TippaPatt / Shutterstock.com

Study: A critical assessment of using ChatGPT for extracting structured data from clinical notes. Image Credit: TippaPatt / Shutterstock.com

AI in medicine

Large-language-based models (LLMs), including Generative Pre-trained Transformer (GPT) artificial intelligence (AI) models like ChatGPT, are used in healthcare to improve patient-clinician communication.

Traditional natural language processing (NLP) approaches like deep learning require problem-specific annotations and model training. However, the lack of human-annotated data, combined with the expenses associated with these models, makes building these algorithms difficult.

Thus, LLMs like ChatGPT provide a viable alternative by relying on logical reasoning and knowledge to aid language processing.

About the study

In the present study, researchers create an LLM-based method for extracting structured data from clinical notes and subsequently converting unstructured text into structured and analyzable data. To this end, the ChatGPT 3.50-turbo model was used, as it is associated with specific Artificial General Intelligence (AGI) capabilities.

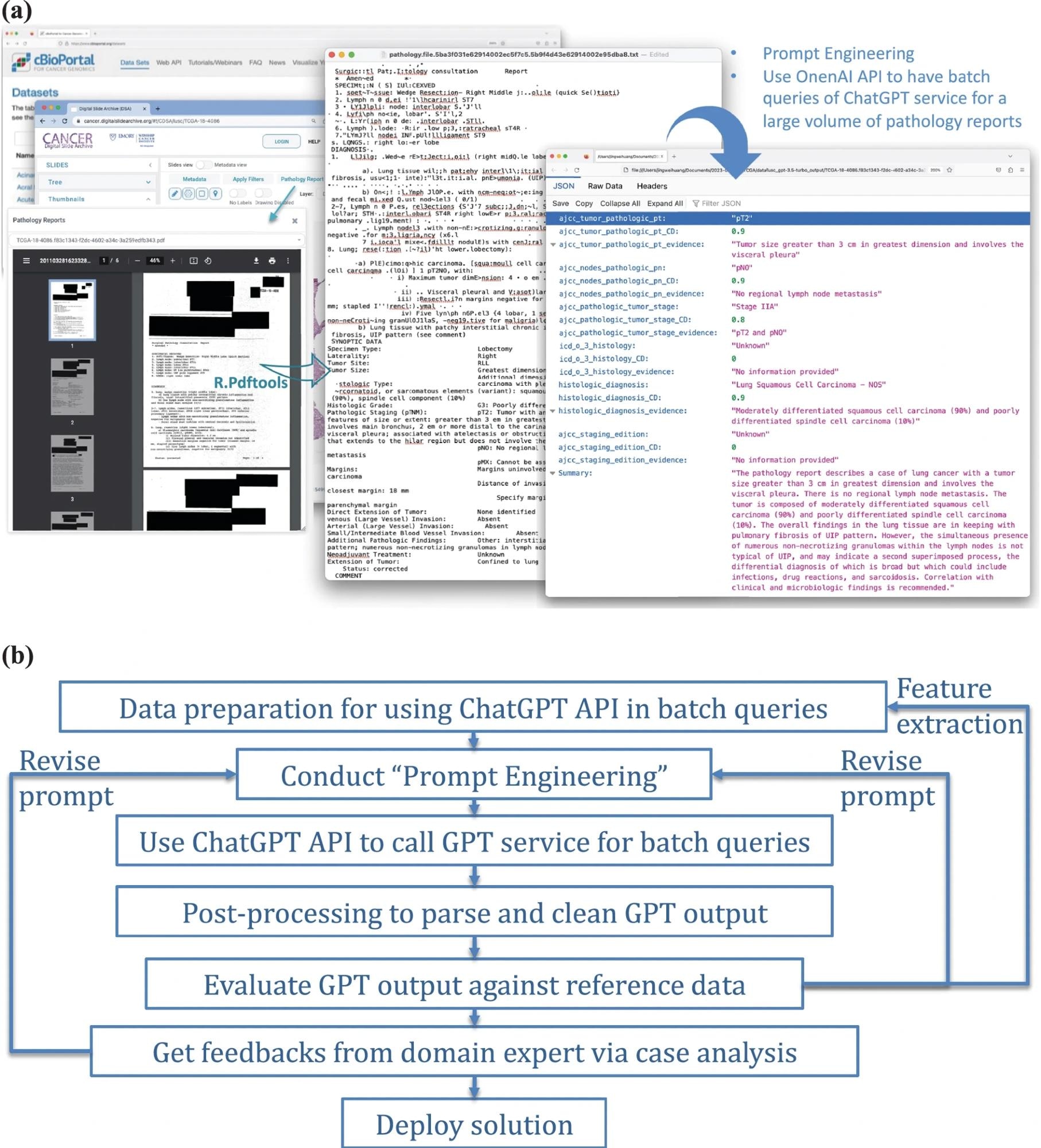

An overview of the process and framework of using ChatGPT for structured data extraction from pathology reports. a Illustration of the use of OpenAI API for batch queries of ChatGPT service, applied to a substantial volume of clinical notes — pathology reports in our study. b A general framework for integrating ChatGPT into real-world applications.

A total of 1,026 lung tumor pathology reports and 191 pediatric osteosarcoma reports from the Cancer Digital Slide Archive (CDSA), which served as the training set, as well as the Cancer Genome Atlas (TCGA), which served as the testing set, were transformed to text using R program. Text data was subsequently analyzed using the OpenAI API, which extracted structured data based on specific prompts.

ChatGPT API was used to perform batch queries, followed by prompt engineering to call the GPT service. Post-processing involved parsing and cleaning GPT output, evaluating GPT outcomes against reference data, and obtaining feedback from domain experts. These processes aimed to extract information on TNM staging and histology type as structured attributes from unstructured pathology reports. Tasks assigned to ChatGPT included estimating targeted attributes, evaluating certainty levels, identifying key evidence, and generating a summary.

From the 99 reports acquired from the CDSA database, 21 were excluded due to low scanning quality, near-empty data content, or missing reports. This led to a total of 78 genuine pathology reports used to train the prompts. To assess model performance, 1,024 pathology reports were obtained from cBioPortal, 97 of which were eliminated due to overlapping with training data.

ChatGPT was directed to utilize the seventh edition of the American Joint Committee on Cancer (AJCC) Cancer Staging Manual for reference. Data analyzed included primary tumor (pT) and lymph node (pN) staging, histological type, and tumor stage. The performance of ChatGPT was compared to that of a keyword search algorithm and deep learning-based Named Entity Recognition (NER) approach.

A detailed error analysis was conducted to identify the types and potential reasons for misclassifications. The performance of GPT version 3.50-Turbos and GPT-4 were also compared.

Study findings

ChatGPT version 3.50 achieved 89% accuracy in extracting pathological classifications from the lung tumor dataset, thus outperforming the keyword algorithm and NER Classified, which had accuracies of 0.9, 0.5, and 0.8, respectively. ChatGPT also accurately classified grades and margin status in osteosarcoma reports, with an accuracy rate of 98.6%.

Model performance was affected by the instructional prompt design, with most misclassifications due to a lack of specific pathology terminologies and improper TNM staging guideline interpretations. ChatGPT accurately extracted tumor information and used AJCC staging guidelines to estimate tumor stage; however, it often used incorrect rules to distinguish pT categories, such as interpreting a maximum tumor dimension of two centimeters as T2.

In the osteosarcoma dataset, ChatGPT version 3.50 precisely classified margin status and grades with an accuracy of 100% and 98.6%, respectively. ChatGPT-3.50 also performed consistently over time in pediatric osteosarcoma datasets; however, it frequently misclassified pT, pN, histological type, and tumor stage.

Tumor stage classification performance was assessed using 744 instances with accurate reports and reference data, 22 of which were due to error propagation, whereas 34 were due to improper regulations. Assessing the classification performance of histological diagnosis using 762 instances showed that 17 cases were unknown or had no output, thereby yielding a coverage rate of 0.96.

The initial model evaluation and prompt-response review identified unusual instances, such as blank, improperly scanned, or missing report forms, which ChatGPT failed to detect in most cases. GPT-4-turbo outperformed the previous model in almost every category, thereby improving this model's performance by over 5%.

Conclusions

ChatGPT appears to be capable of handling massive clinical note volumes to extract structured data without requiring considerable task-based human annotation or model data training. Taken together, the study findings highlight the potential of LLMs to convert unstructured-type healthcare information into organized representations, which can ultimately facilitate research and clinical decisions in the future.

Journal reference:

- Huang, J., Yang, D.M., Rong, R., et al. (2024). A critical assessment of using ChatGPT for extracting structured data from clinical notes. npj Digital Medicine 7(106). doi:10.1038/s41746-024-01079-8