AI hallucinations are getting worse

And no one knows why it is happening

As generative artificial intelligence has grown more popular, it has also shown a tendency to fudge the truth. These lies, or hallucinations as they are known in the tech industry, had become less frequent as companies improved the tools' functionality. But the most recent models are bucking that trend by hallucinating more frequently.

New reasoning models are on quite the trip

In the years since the arrival of ChatGPT and increased AI bot integration into an array of tasks, there is still "no way of ensuring that these systems produce accurate information," The New York Times said. Today's AI bots "do not — and cannot — decide what is true and what is false." Lately, the hallucination problem seems to be getting worse as the technologies become more powerful. Reasoning models, considered the "newest and most powerful technologies" from the likes of OpenAI, Google and the Chinese start-up DeepSeek, are "generating more errors, not fewer." The models' math skills have "notably improved," but their "handle on facts has gotten shakier." It is "not entirely clear why."

Reasoning models are a type of large language model (LLM) designed to perform complex tasks. Instead of "merely spitting out text based on statistical models of probability," reasoning models "break questions or tasks down into individual steps akin to a human thought process," said PC Gamer. In tests of its latest reasoning systems, OpenAI found that its o3 system hallucinated 33% of the time on its PersonQA benchmark, which involves answering questions about public figures. That is more than twice the hallucination rate of OpenAI's previous reasoning system, o1. The latest tool, o4-mini, hallucinated at an even higher rate of 48%.

OpenAI has pushed back against the notion that reasoning models suffer from increased rates of hallucination and said more research is needed to understand the findings. Hallucinations are not "inherently more prevalent in reasoning models." Nonetheless, OpenAI is "actively working to reduce the higher rates of hallucination we saw in o3 and o4-mini," said company spokesperson Gaby Raila to the Times.

Too many 'unwanted robot dreams'

Hallucinations, to some experts, seem inherent to the technology. Despite companies' best efforts, AI "will always hallucinate," said Amr Awadallah, the chief executive of AI startup Vectara and former Google executive, to the Times. "That will never go away."

Still, hallucinations pose a "serious issue for anyone using the technology with court documents, medical information or sensitive business data," said the Times. "You spend a lot of time trying to figure out which responses are factual and which aren't," said Pratik Verma, cofounder and chief executive of Okahu, a company that helps businesses navigate hallucination problems. Not dealing with these errors "eliminates the value of AI systems, which are supposed to automate tasks."

Companies are "struggling to nail down why exactly chatbots are generating more errors than before" — a struggle that "highlights the head-scratching fact that even AI's creators don't quite understand how the tech actually works," said Futurism. The recent troubling hallucination trend "challenges the industry's broad assumption that AI models will become more powerful and reliable as they scale up."

Whatever the truth, AI models need to "largely cut out the nonsense and lies if they are to be anywhere near as useful as their proponents currently envisage," said PC Gamer. It is already "hard to trust the output of any LLM," and almost all data "has to be carefully double checked." That is fine for some tasks, but when the objective is "saving time or labor," the need to "meticulously proof and fact-check AI output does rather defeat the object of using them." It is unclear whether OpenAI and the rest of the LLM industry will "get a handle on all those unwanted robot dreams."

Theara Coleman has worked as a staff writer at The Week since September 2022. She frequently writes about technology, education, literature and general news. She was previously a contributing writer and assistant editor at Honeysuckle Magazine, where she covered racial politics and cannabis industry news.

-

Learning loss: AI cheating upends education

Learning loss: AI cheating upends educationFeature Teachers are questioning the future of education as students turn to AI for help with their assignments

-

Why Iranian cities are banning dog walking

Why Iranian cities are banning dog walkingUnder The Radar Our four-legged friends are a 'contentious topic' in the Islamic Republic

-

Andrea Long Chu's 6 favorite books for people who crave new ideas

Andrea Long Chu's 6 favorite books for people who crave new ideasFeature The book critic recommends works by Rachel Cusk, Sigmund Freud, and more

-

Learning loss: AI cheating upends education

Learning loss: AI cheating upends educationFeature Teachers are questioning the future of education as students turn to AI for help with their assignments

-

AI: Will it soon take your job?

AI: Will it soon take your job?Feature AI developers warn that artificial intelligence could eliminate half of all entry-level jobs within five years

-

The rise of 'vibe coding'

The rise of 'vibe coding'In The Spotlight Silicon Valley rush to embrace AI tools that allow anyone to code and create software

-

Bitcoin braces for a quantum computing onslaught

Bitcoin braces for a quantum computing onslaughtIN THE SPOTLIGHT The cryptocurrency community is starting to worry over a new generation of super-powered computers that could turn the digital monetary world on its head.

-

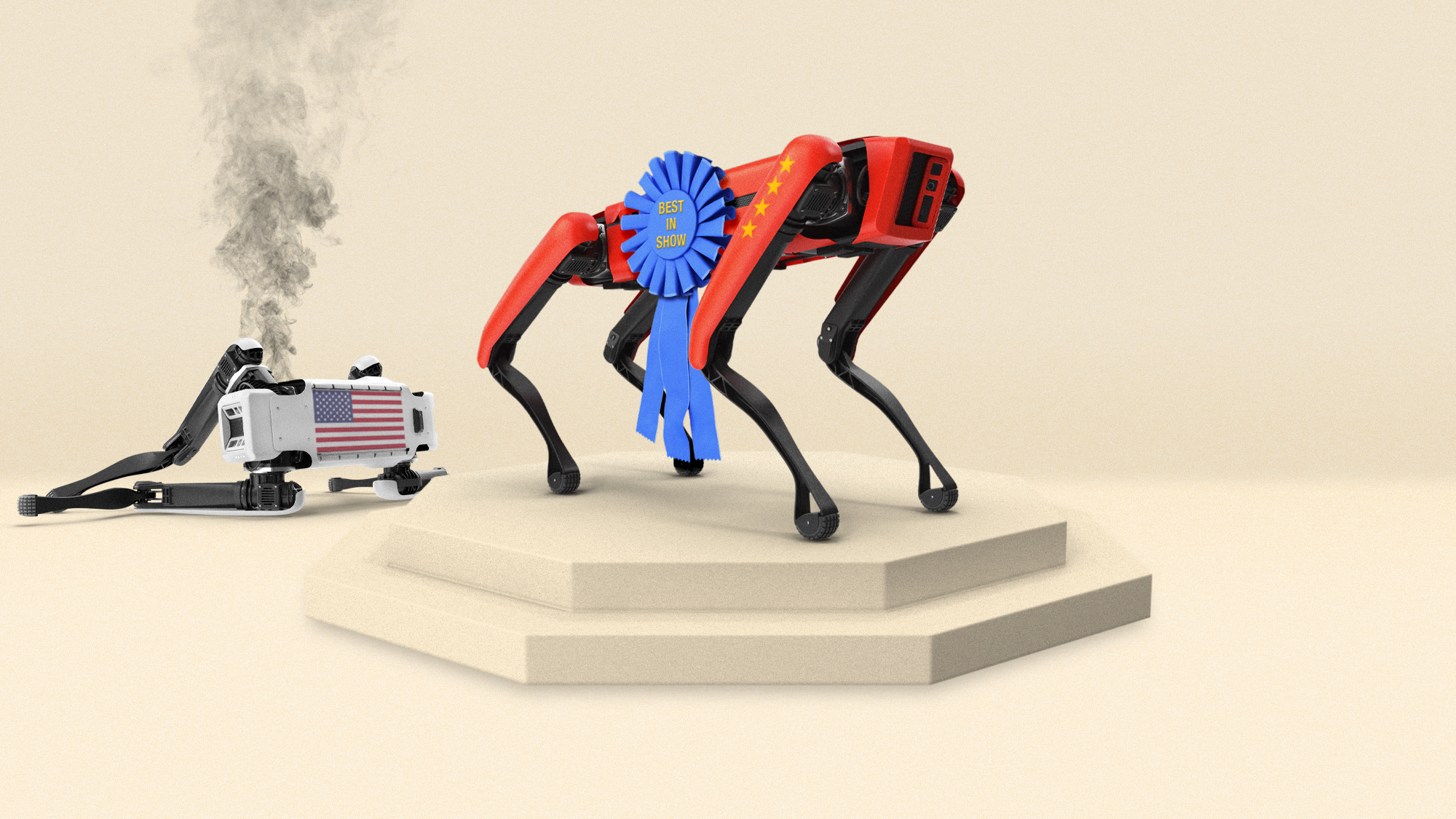

Is China winning the AI race?

Is China winning the AI race?Today's Big Question Or is it playing a different game than the US?

-

Google's new AI Mode feature hints at the next era of search

Google's new AI Mode feature hints at the next era of searchIn the Spotlight The search giant is going all in on AI, much to the chagrin of the rest of the web

-

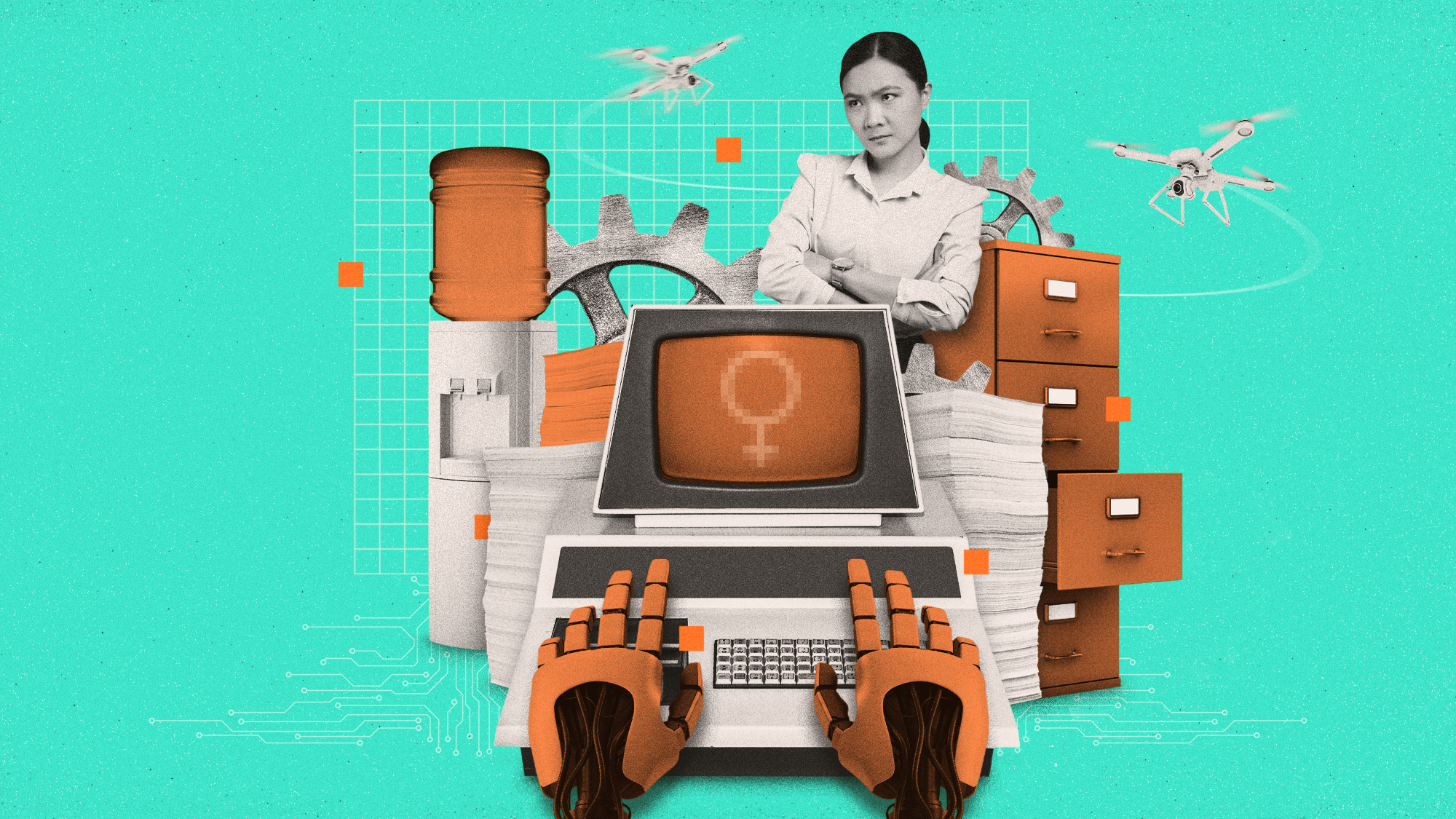

How the AI takeover might affect women more than men

How the AI takeover might affect women more than menThe Explainer The tech boom is a blow to gender equality

-

Did you get a call from a government official? It might be an AI scam.

Did you get a call from a government official? It might be an AI scam.The Explainer Hackers may be using AI to impersonate senior government officers, said the FBI