There exist, on the internet, any number of videos that show people doing things they never did. Real people, real faces, close to photorealistic footage; entirely unreal events.

These videos are called deepfakes, and they’re made using a particular kind of AI. Inevitably enough, they began in porn – there is a thriving online market for celebrity faces superimposed on porn actors’ bodies – but the reason we’re talking about them now is that people are worried about their impact on our already fervid political debate. Those worries are real enough to prompt the British government and the US Congress to look at ways of regulating them.

The video that kicked off the sudden concern last month was, in fact, not a deepfake at all. It was a good old-fashioned doctored video of Nancy Pelosi, the speaker of the US House of Representatives. There were no fancy AIs involved; the video had simply been slowed down to about 75% of its usual speed, and the pitch of her voice raised to keep it sounding natural. It could have been done 50 years ago. But it made her look convincingly drunk or incapable, and was shared millions of times across every platform, including by Rudy Giuliani – Donald Trump’s lawyer and the former mayor of New York.
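The arithmetic behind that trick is simple enough to sketch. The snippet below assumes the slowdown was a plain speed change, which lowers the pitch in proportion to the speed; the function names are illustrative and nothing here is taken from the people who made the video.

```python
import math


def pitch_correction_semitones(speed_factor: float) -> float:
    """Semitones the pitch must be raised to undo a plain slowdown.

    Assumption: playing audio at `speed_factor` times normal speed
    multiplies every frequency by the same factor.
    """
    correction_ratio = 1.0 / speed_factor
    # One semitone is a frequency ratio of 2 ** (1 / 12).
    return 12.0 * math.log2(correction_ratio)


def slowed_duration(seconds: float, speed_factor: float) -> float:
    """Length of a clip after it is played at `speed_factor` speed."""
    return seconds / speed_factor


semitones = pitch_correction_semitones(0.75)
duration = slowed_duration(60.0, 0.75)
print(f"raise pitch by about {semitones:.2f} semitones")
print(f"a 60-second clip now lasts {duration:.0f} seconds")
```

Under that assumption, a clip slowed to 75% needs its pitch raised by roughly five semitones, and a one-minute clip stretches to 80 seconds.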

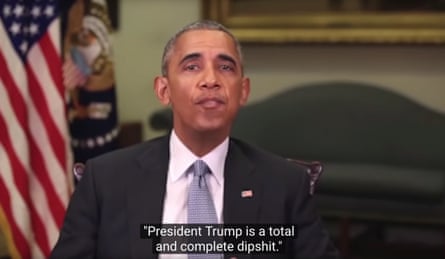

It got people worrying about fake videos in general, and deepfakes in particular. Since the Pelosi video came out, a deepfake of Mark Zuckerberg apparently talking about how he has “total control of billions of people’s stolen data” and how he “owe[s] it all to Spectre”, the product of a team of satirical artists, went viral as well. Last year, the Oscar-winning director Jordan Peele and his brother-in-law, BuzzFeed CEO Jonah Peretti, created a deepfake of Barack Obama apparently calling Trump a “complete and utter dipshit” to warn of the risks to public discourse.

A lot of our fears about technology are overstated. For instance, despite worries about screen time and social media, high-quality research generally finds little evidence that they have a major impact on our mental health. Every generation has its techno-panic: video nasties, violent computer games, pulp novels.

But, says Sandra Wachter, a professor in the law and ethics of AI at the Oxford Internet Institute, deepfakes might be a different matter. “I can understand the public concern,” she says. “Any tech developing so quickly could have unforeseen and unintended consequences.” It’s not that fake videos or misinformation are new, but things are changing so fast, she says, that it’s challenging our ability to keep up. “The sophisticated way in which fake information can be created, how fast it can be created, and how endlessly it can be disseminated is on a different level. In the past, I could have spread lies, but my range was limited.”

Here’s how deepfakes work. They are the product of not one but two AI algorithms, which work together in something called a “generative adversarial network”, or Gan. The two algorithms are called the generator and the discriminator.

Imagine a Gan that has been designed to create believable spam emails. The discriminator would be exactly the same as a real spam filter algorithm: it would simply sort all emails into either “spam” or “not spam”. It would do that by being given a huge folder of emails, and determining which elements were most often associated with the ones it was told were spam: perhaps words like “enlarger” or “pills” or “an accident that wasn’t your fault”. That folder is its “training set”. Then, as new emails came in, it would give each one a rating based on how many of these features it detected: 60% likely to be spam, 90% likely, and so on. All emails above a certain threshold would go into the spam folder. The bigger its training set, the better it gets at telling real from fake.
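A discriminator of this kind can be sketched in a few lines. The example below is a minimal word-counting filter (a naive Bayes classifier), which is one common way to build a spam filter, not necessarily the one any real product uses. The tiny training set, the function names and the 80% threshold are all made up for illustration.

```python
import math
from collections import Counter

# Hypothetical training set: (email text, was it labelled spam?)
TRAINING_SET = [
    ("cheap pills enlarger offer", True),
    ("an accident that wasn't your fault claim now", True),
    ("pills pills discount enlarger", True),
    ("lunch meeting moved to noon", False),
    ("your invoice for the project is attached", False),
    ("see you at the meeting tomorrow", False),
]


def train(emails):
    """Count how often each word appears in spam and in non-spam."""
    counts = {True: Counter(), False: Counter()}
    totals = {True: 0, False: 0}
    for text, is_spam in emails:
        counts[is_spam].update(text.lower().split())
        totals[is_spam] += 1
    return counts, totals


def spam_probability(text, counts, totals):
    """Rate an email between 0 and 1 using naive Bayes with add-one smoothing."""
    vocab = set(counts[True]) | set(counts[False])
    spam_words = sum(counts[True].values())
    ham_words = sum(counts[False].values())
    # Start from how common spam is overall, then adjust word by word.
    log_odds = math.log(totals[True] / totals[False])
    for word in text.lower().split():
        if word not in vocab:
            continue  # words never seen in training carry no evidence
        p_spam = (counts[True][word] + 1) / (spam_words + len(vocab))
        p_ham = (counts[False][word] + 1) / (ham_words + len(vocab))
        log_odds += math.log(p_spam / p_ham)
    return 1.0 / (1.0 + math.exp(-log_odds))


def classify(text, counts, totals, threshold=0.8):
    """Everything rated at or above the threshold goes in the spam folder."""
    rating = spam_probability(text, counts, totals)
    return "spam" if rating >= threshold else "not spam"


counts, totals = train(TRAINING_SET)
for email in ["discount pills offer", "meeting tomorrow at noon"]:
    rating = spam_probability(email, counts, totals)
    print(f"{email!r}: {rating:.0%} likely spam -> {classify(email, counts, totals)}")
```

With only six training emails the ratings are crude, which is the point made above: the bigger the training set, the more reliable the word counts become.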

But the generator algorithm works the other way. It takes that same dataset and uses it to build new emails that don’t look like spam. It knows to avoid words like “penis” or “won an iPad”. And when it makes them, it puts them into the stream of data going through the discriminator. The two are in competition: if the discriminator is fooled, the generator “wins”; if it isn’t, the discriminator “wins”. And either way, it’s a new piece of data for the Gan. The discriminator gets better at telling fake from real, so the generator has to get better at creating the fakes. It is an arms race, a self-reinforcing cycle. This same system can be used for creating almost any digital product: spam emails, art, music – or, of course, videos.
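The arms race can be shown with a toy example far simpler than video. In the sketch below, “real” data are just numbers clustered around 4, the generator is a straight line that turns random noise into a number, and the discriminator is a single logistic unit that rates each number as real or fake. Every name and setting here is an assumption chosen for illustration. In this version the generator never sees the real data directly; it learns only from the discriminator’s verdicts.

```python
import math
import random


def sigmoid(t):
    """Squash a score into a 0-1 'probability of being real'."""
    t = max(-60.0, min(60.0, t))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-t))


def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        real = rng.gauss(4.0, 1.0)  # one sample of real data
        z = rng.gauss(0.0, 1.0)     # random noise fed to the generator
        fake = a * z + b

        # Discriminator step: lower -log D(real) - log(1 - D(fake)).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        grad_w = -(1.0 - d_real) * real + d_fake * fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: lower -log D(fake), i.e. try to fool the
        # discriminator that has just been updated.
        d_fake = sigmoid(w * fake + c)
        grad_fake = -(1.0 - d_fake) * w
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return (a, b), (w, c)


(gen_a, gen_b), (disc_w, disc_c) = train_toy_gan()
print(f"generator: fake = {gen_a:.2f} * noise + {gen_b:.2f}")
print(f"discriminator: D(x) = sigmoid({disc_w:.2f} * x + {disc_c:.2f})")
```

Each pass through the loop is one round of the contest: the discriminator adjusts to separate real from fake, then the generator adjusts to slip past it. A toy this small may circle around a good answer rather than settle on one, so the final numbers should not be read as a benchmark.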

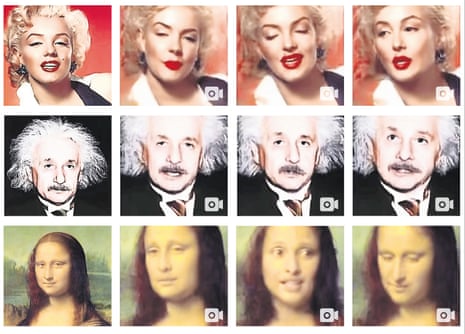

Gans are hugely powerful, says Christina Hitrova, a researcher in digital ethics at the Alan Turing Institute for AI, and have many interesting uses – they’re not just for creating deepfakes. The photorealistic imaginary people at ThisPersonDoesNotExist.com are all created with Gans. Discriminatory algorithms (such as spam filters) can be improved by Gans creating ever better things to test them with. Gans can do amazing things with pictures, including sharpening up fuzzy ones or colourising black-and-white ones. “Scientists are also exploring using Gans to create virtual chemical molecules,” says Hitrova, “to speed up materials science and medical discoveries: you can generate new molecules and simulate them to see what they can do.” Gans were only invented in 2014, but have already become one of the most exciting tools in AI.

But they are widely available, easy to use, and increasingly sophisticated, able to create ever more believable videos. “There’s some way to go before the fakes are undetectable,” says Hitrova. “For instance, with CGI faces, they haven’t quite perfected the generation of teeth or eyes that look natural. But this is changing, and I think it’s important that we explore solutions – technological solutions, and digital literacy solutions, as well as policy solutions.”

With Gans, one technological solution presents itself immediately: simply use the discriminator to tell whether a given video is fake. But, says Hitrova, “Obviously that’s going to feed into the fake generator to produce even better fakes.” For instance, she says, one tool was able to identify deepfakes by looking at the pattern of blinking. But then the next generation will take that into account, and future discriminators will have to use something else. The arms race that goes on inside Gans will go on outside, as well.

Other technological solutions include hashing – essentially a form of digital watermarking, giving a video file a short string of numbers which no longer matches if the video is tampered with – or, controversially, “authenticated alibis”, wherein public figures constantly record where they are and what they’re doing, so that if a deepfake circulates apparently showing them doing something they want to disprove, they can show what they were really doing. That idea has been tentatively floated by the AI law specialist Danielle Citron, but as Hitrova points out, it has “dystopian” implications.
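The hashing idea can be sketched with a standard cryptographic hash. The example below uses SHA-256 from Python’s standard library and a few stand-in bytes in place of a real video file; the function names and the sample data are illustrative assumptions, not a description of any deployed verification scheme.

```python
import hashlib


def fingerprint(video_bytes: bytes) -> str:
    """Return a short, fixed-length fingerprint of the file's contents."""
    return hashlib.sha256(video_bytes).hexdigest()


def matches_published(video_bytes: bytes, published: str) -> bool:
    """Check a copy of the file against the fingerprint its creator published."""
    return fingerprint(video_bytes) == published


# Stand-in for the bytes of an original video file.
original = b"frame-1 frame-2 frame-3"
published = fingerprint(original)

# A copy with a single byte altered.
tampered = b"frame-1 frame-2 frame-4"

print(matches_published(original, published))
print(matches_published(tampered, published))
```

The first check passes and the second fails, because even a one-byte change produces a completely different fingerprint. The same sensitivity is a practical limit: an innocent re-encoding or resize would also change the fingerprint, so a mismatch shows the file differs from the original, not that it was maliciously faked.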

None of these solutions can entirely remove the risk of deepfakes. Some form of authentication may work to tell you that certain things are real, but what if someone wants to deny the reality of something real? If there had been deepfakes in 2016, says Hitrova, “Trump could have said, ‘I never said “grab them by the pussy”.’” Most would not have believed him – it came from Access Hollywood tapes and was confirmed by the show’s presenter – but it would have given an excuse for people to doubt them.

Education – critical thinking and digital literacy – will be important too. Finnish children score highly on their ability to spot fake news, a trait that is credited to the country’s policy of teaching critical thinking skills at school. But that can only be part of the solution. For one thing, most of us are not at school. Even if the current generation of schoolchildren becomes more wary – as they naturally are anyway, having grown up with digital technology – their elders will remain less so, as can be seen in the case of British MPs being fooled by obvious fake tweets. “Older people are much less tech-savvy,” says Hitrova. “They’re much more likely to share something without fact-checking it.”

Wachter and Hitrova agree that some sort of regulatory framework will be necessary. Both the US and the UK are grappling with the idea. At the moment, in the US, social media platforms are not held responsible for their content. Congress is considering changing that, and making such immunity dependent on “reasonable moderation practices”. Some sort of requirement to identify fake content has also been floated.

Wachter says that something like copyright, by which people have the right for their face not to be used falsely, may be useful, but that by the time you’ve taken down a deepfake, the reputational damage may already be done, so preemptive regulation is needed too.

A European Commission report two weeks ago found that digital disinformation was rife in the recent European elections, and that platforms are failing to take steps to reduce it. Facebook, for instance, has entirely washed its hands of responsibility for fact-checking, saying that it will only take down fake videos after a third-party fact-checker has declared them to be false.

Britain, though, is taking a more active role, says Hitrova. “The EU is using the threat of regulation to force platforms to self-regulate, which so far they have not,” she says. “But the UK’s recent online harms white paper and the Department for Digital, Culture, Media and Sport subcommittee [on disinformation, which has not yet reported but is expected to recommend regulation] show that the UK is really planning to regulate. It’s an important moment; they’ll be the first country in the world to do so, they’ll have a lot of work – it’s no simple task to balance fake news against the rights to parody and art and political commentary – but it’s truly important work.” Wachter agrees: “The sophistication of the technology calls for new types of law.”

In the past, as new forms of information and disinformation have arisen, society has developed antibodies to deal with them: few people would be fooled by first world war propaganda now. But, says Wachter, the world is changing so fast that we may not be able to develop those antibodies this time around – and even if we do, it could take years, and we have a real problem to sort out right now. “Maybe in 10 years’ time we’ll look back at this stuff and wonder how anyone took it seriously, but we’re not there now.”
